From Gaming to AI: How NVIDIA Became the Backbone of the AI Revolution

From scrubbing dishes to reshaping the future of computing

Hey there 👋

Just as I was writing this piece, news broke that NVIDIA had become the first company in history to hit a $5 trillion market cap. Yup, that’s trillion with a T. With 000,000,000,000 next to a freakin 5.

In the midst of this Artificial Intelligence race, NVIDIA is at the center - creator of the technology and ecosystem that the whole AI world desperately depends upon.

Although NVIDIA is at the top of the AI game, it still manages to be the leading brand in its roots - PC graphics. NVIDIA PC graphics cards are as in-demand as their enterprise AI chips. And today I want to unpack what is behind this company in totality... so far.

So, let’s talk about NVIDIA, a company as cool as the story of its three co-founders hanging out at a fast-food restaurant, living on coffee and talking about solving computer graphics.

NVIDIA is the company that changed gaming as we see it today, and it is now quietly powering the world of AI right before our very eyes.

This article is a bit different from the normal ones you read here at SK NEXUS. This is going to be more of a deep-dive into the story of NVIDIA divided into chapters.

There are 3 main chapters in this article and you’re free to jump to the ones you’re more interested in. The chapters are:

Chapter 1 - This covers the early history of NVIDIA and how they made their way out of near bankruptcy to a leader in gaming.

Chapter 2 - We’ll talk about the cool technology that made the world depend on NVIDIA.

Chapter 3 - It’s about NVIDIA’s rise to its current form, where it is the undisputed king of the AI infrastructure that is powering the current AI race. Or bubble.

Chapter 0 - Some Key Terms

You thought there were only going to be 3 chapters, huh?

I created this mystery chapter just to introduce some of the key terms that I’m going to repeat again and again in this article.

It’s better to have most readers on the same page so no one feels left out reading the rest of the article. I’ll explain the concepts just enough so you can get through this article.

You may choose to skip this chapter if you’re already familiar with most of the stuff being discussed. However, skimming through it won’t hurt.

CPU

It stands for Central Processing Unit and it is the main processor in a computer. Each CPU core works through its tasks in series, finishing one and then continuing with the next.

CPUs perform general-purpose compute, which means they can do all sorts of computations that a computer needs, but not at the fastest speed in all cases.

GPU

It stands for Graphics Processing Unit. It is the chip that handles the graphics inside a computer. It can run a lot of tasks in parallel.

GPUs are for special applications. You can think of them as CPUs that can only perform some tasks, but at a really fast speed.

Most modern computers have some sort of integrated GPU.

Graphics Card

This term refers to a GPU that you can add to your computer to expand its graphics capability.

Graphics cards have the GPU chip inside them, along with some additional components like memory, fans and power modules.

Note: For the nerds out there, we know that a GPU is not the same thing as a graphics card. But in this day and age, the lines have blurred between them.

Neural Network

Neural networks are algorithms that loosely mimic how the human brain works. They learn patterns from data, which is what machine learning is all about.

Neural networks are the foundation of today’s Artificial Intelligence, with applications that go all the way from self-driving and weather prediction to real-time speech translation and image generation.

AI services like ChatGPT also rely on neural networks.
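If you like seeing things in code, here is a tiny sketch of what a single layer of a neural network computes. It is only an illustration in plain Python: the function names (`relu`, `dense_layer`) and the numbers are made up for this example, and real networks have millions of these weights that are learned from data rather than typed in by hand.

```python
def relu(x):
    # A common activation: negative values become 0, positive values pass through.
    return max(0.0, x)

def dense_layer(inputs, weights, biases):
    """One layer: every output neuron takes a weighted sum of all the inputs."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(relu(total))
    return outputs

# Made-up example values: 2 inputs feeding 2 neurons.
inputs = [1.0, 2.0]
weights = [[0.5, -1.0],   # weights of neuron 0
           [1.0, 1.0]]    # weights of neuron 1
biases = [0.0, -1.0]

layer_out = dense_layer(inputs, weights, biases)
print(layer_out)
```

Notice that each neuron’s sum does not depend on any other neuron’s sum, so they can all be computed at the same time. Keep that in mind for the rest of the article.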

Chapter 1 – Humble Beginnings and Gaming Breakthrough

1.1 Founding the company

In the 1990s there were roughly 3 dozen companies working on building graphics chips for the PC, including giants like IBM and Intel.

But today a small startup founded in 1993 reigns above everyone else as the de facto leader in making graphics chips for PCs, and that is NVIDIA.

NVIDIA was founded in 1993 by Jensen Huang, Chris Malachowsky and Curtis Priem. There is a funny story about the company’s origins.

Fun Fact: The name NVIDIA is a mixture of two words: invidia, the Latin word for envy, and the acronym NV (short for next vision).

Jensen Huang, NVIDIA’s CEO, says that his first job was as a dishwasher at Denny’s. This is what he said in an interview at Stanford University:

“My first job before CEO was a dishwasher....

And I did that very well.”

Jensen recalls that the company was founded at Denny’s (a popular fast-food place in the US). The three co-founders used to visit the restaurant for coffee.

Jensen was working at LSI Logic and during one of the meetings with his fellow co-founders, they decided on founding a company that would work on creating 3D graphics chips for PCs.

The three co-founders agreed to leave their stable jobs and found NVIDIA. The idea of solving computer graphics was tempting as PC gaming was starting to pick up pace.

Developers like ‘id Software’ were at the heart of the gaming revolution with groundbreaking games like Doom and Quake.

Quake in particular started to show how technically impressive PC gaming could be, and games like it started to push the limits of traditional PCs.

This was a moment of realization for people like Jensen Huang, who saw a huge opportunity not just in video games but also in the rise of computer graphics.

As more and more programs started using the Graphical User Interface (GUI), the need for better graphics kept rising, and games, the most graphically intense applications of all, pushed it even further.

1.2 First major products & gaming breakout

NVIDIA’s first product was the NV1 multimedia chip. It was impressive on the technical front, but it was a financial failure.

It took such a big toll on the small company that half of the staff had to be laid off.

During this time NVIDIA lost a partnership with the gaming company SEGA to produce chips for their next console. This added to the financial woes.

Still, SEGA was kind enough to invest $5M in NVIDIA, which gave it the breathing room to continue work on its next chip.

NVIDIA’s next chip that got released in 1997 was the RIVA 128 and it was a success. It supported 2D and 3D graphics and it sold over a million units.

By the time the RIVA 128 was released in August 1997, NVIDIA had only enough money left for a month of payroll.

Fun Fact: Because of the constant fear of running out of funds, NVIDIA’s unofficial motto was “Our company is thirty days from going out of business.”

The company continued with its next chip (RIVA TNT) and it was a success too.

These successes gave the company the breathing room to continue, and in 1999 NVIDIA went public on the Nasdaq stock exchange.

The World’s First (Consumer) GPU

NVIDIA didn’t invent the term GPU which stands for Graphics Processing Unit. It means a chip that is designed specifically for graphics-related applications.

The term had been in use since the 1980s but NVIDIA was the first company to focus on popularizing the term GPU among the consumers.

Before NVIDIA, GPUs were sold mostly to enterprises or big companies like console manufacturers, and normal consumers at large did not know about those.

NVIDIA’s GeForce 256, released in 1999, was a hit with consumers. It was a drop-in card like any other that plugged into the AGP slot on a motherboard, and it handled transform and lighting calculations on the graphics chip instead of the CPU.

The GeForce 256 was marketed as the ‘World’s First GPU’ and it was an important product not just for NVIDIA but for PC gaming in general.

This was the time when PC gaming started becoming serious in terms of graphical fidelity and performance.

1.3 Building the brand and ecosystem

Right after their IPO, NVIDIA started building their brand and reputation as the first choice for gamers.

This included strategic partnerships built on their reputation and technology. Soon after their IPO (in 1999), NVIDIA won Microsoft’s $200M contract to build the GPU for the Xbox.

NVIDIA focused on partnering with OEMs and game studios for exclusive titles and hardware deals aimed at PC gamers.

After partnering with Microsoft on the Xbox, NVIDIA partnered with Sony to help develop the RSX chip for the PS3.

NVIDIA also partnered with NASA to develop a Mars simulation. This allowed the company to build experience outside video games.

Meanwhile, NVIDIA started doing something that no-one else was doing at the time.

NVIDIA was among the first companies to take ownership of writing their own graphics drivers, the software that allows the GPU to function properly in a computer.

Before that, third-party OEMs would write drivers, but NVIDIA took control early on and started writing its own, which gave it vertical integration.

Vertical integration is great but not in everything, and NVIDIA was well aware of that.

Being a small company it didn’t go about manufacturing its own chips. It used companies like TSMC to manufacture the physical chips while NVIDIA focused on its chip design and the software stack (graphics drivers).

Contracting TSMC early-on for their graphics chips was an extraordinary decision for NVIDIA that is still paying them dividends.

This way NVIDIA stuck to what it does best, while allowing others like TSMC to partner in areas where they excel.

Chapter 2 – The Platform & Compute Expansion

By the 2010s NVIDIA was already the leader in building graphics cards. Their products were the top choice not just for gamers but for big clientele like Sony and Microsoft - everyone wanted to partner with NVIDIA.

As early as 2006, NVIDIA started laying the groundwork for expanding beyond video games. And the coming decade gave it that very opportunity.

2.1 CUDA

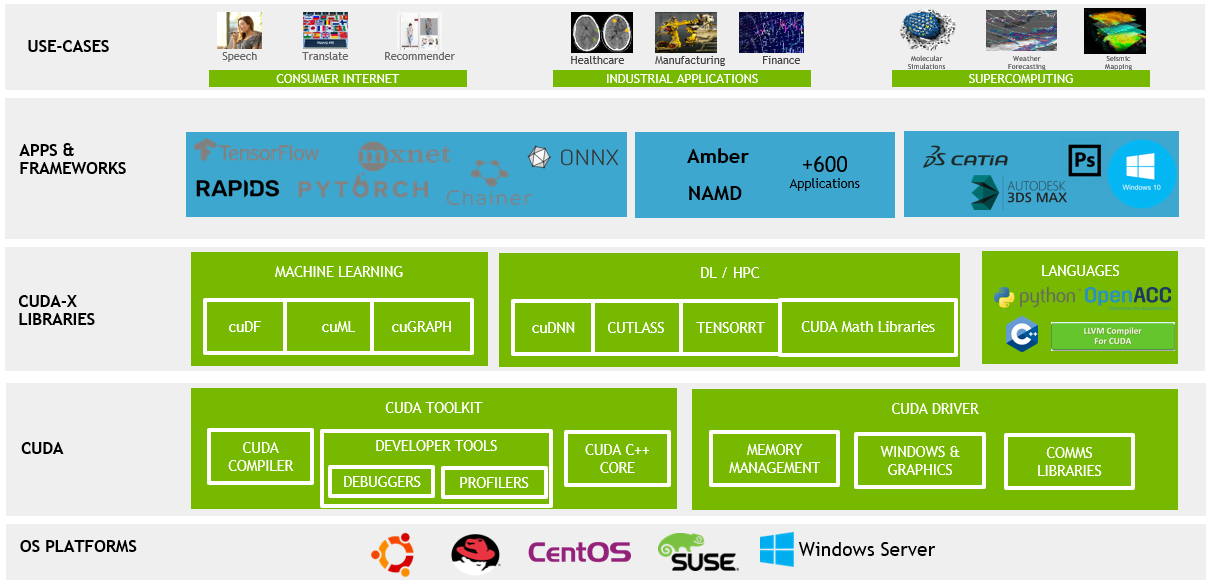

In 2006, NVIDIA came up with CUDA, and it is the foundation that sets NVIDIA apart from the others.

CUDA (Compute Unified Device Architecture) started out as a programming model, built as an extension of the C language, that allowed developers to write programs that run on the GPU.

Traditionally we write programs for CPUs and we use GPUs as just accelerators for applications that are graphically intensive like Video Games, Graphics Rendering, etc.

With time, CUDA evolved and got so mature that it is now a complete tech stack for writing GPU-specific applications.

Since CUDA is proprietary to NVIDIA GPUs, the company is the biggest beneficiary of its fruits.

On a surface level, what CUDA gave us was the ability to use GPUs not just for graphics processing but for many other tasks, especially those that benefit from parallel computing.
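To make the idea a bit more concrete, here is a rough sketch of the CUDA way of thinking, written in plain Python so anyone can run it. This is not real CUDA code: the `launch` helper is a hypothetical stand-in for a GPU launch, and the names are made up for illustration. The point is the shape of the program: you write a small function (a "kernel") that describes the work of one thread, and the GPU runs thousands of copies of it, one per piece of data.

```python
def saxpy_kernel(thread_id, a, x, y, out):
    """The work of ONE thread: compute a single element of a*x + y."""
    # Guard against extra threads, since more threads may be launched than there is data.
    if thread_id < len(x):
        out[thread_id] = a * x[thread_id] + y[thread_id]

def launch(kernel, num_threads, *args):
    """Hypothetical stand-in for a GPU launch.

    A real GPU would run these threads at the same time;
    this loop simply runs them one after another on the CPU.
    """
    for thread_id in range(num_threads):
        kernel(thread_id, *args)

x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(x)

# Launch 8 "threads" even though only 4 of them have data to work on.
launch(saxpy_kernel, 8, 2.0, x, y, out)
print(out)
```

Because no thread needs the result of any other thread, the order in which they run doesn’t matter. That independence is what lets a GPU spread the same work over thousands of cores.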

NVIDIA could see that CUDA would become a significant thing, and that’s why the company reportedly poured more than a billion dollars into developing it, starting back in the mid-2000s.

Today you basically need CUDA for many tasks like image generation, running LLMs and machine learning tasks like image recognition.

And in tasks where you don’t absolutely need CUDA you still get a significant advantage in performance when compared to any other platform.

That is just because NVIDIA’s CUDA is the most mature platform. Developers continue to develop and optimize their applications for CUDA.

2.2 Enterprise Expansion

NVIDIA was done conquering the consumer gaming segment but it didn’t rest and started venturing into data center GPUs.

Product lines like Quadro and Tesla GPUs were aimed directly at companies with complex graphical needs and those GPUs started building NVIDIA’s reputation in the big corporations.

NVIDIA was convincing big companies that GPUs are not just for video gamers and have a wide range of other uses.

All this while, NVIDIA continued to tighten the integration between its hardware and the CUDA ecosystem, with developers in mind.

Competitors like AMD didn’t have the CUDA moat or the reputation that NVIDIA had built in the decades of doing business and that’s why it has been hard to compete with NVIDIA.

This diversification from catering to just consumer video gamers to datacenter and enterprise was really important.

It was the clear signal that NVIDIA is no longer just the “gamer chip company” but a serious force catering to a large enterprise clientele.

2.3 Ecosystem Lock-In

Hardware alone doesn’t win wars in tech. NVIDIA learned this early on and, from the very start, emphasized its own ecosystem.

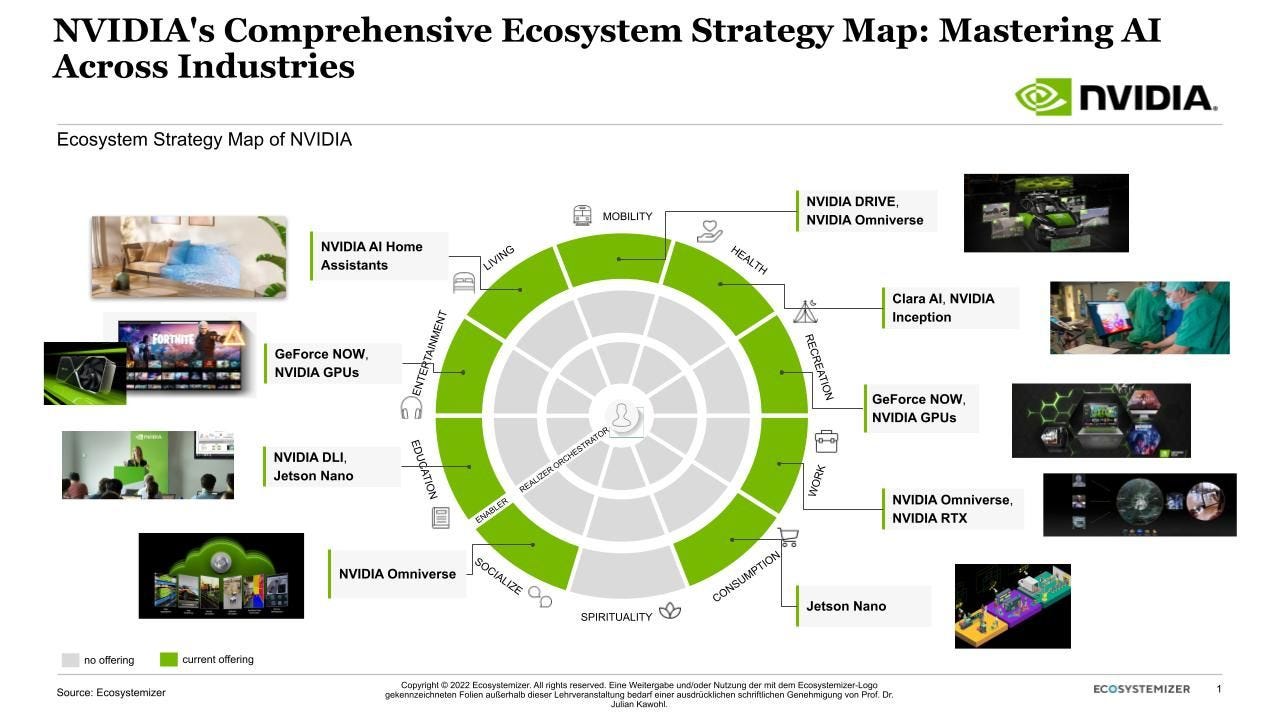

The ecosystem started with CUDA, but it expanded with new technologies like full-stack software libraries for developers.

NVIDIA’s ecosystem grew through third-party partnerships and broad support for its platform.

CUDA’s backwards compatibility, going all the way down to the very first version, meant that developers could rely on code they had already written continuing to work on newer GPUs.

For developers who were used to NVIDIA’s ecosystem, it was hard to switch because software worked better on NVIDIA hardware, and NVIDIA’s software stack is still the most widely supported.

The company was also involved in acquiring companies that it believed had something to add to its ecosystem.

NVIDIA was even about to buy the British chip design giant ARM but the deal was called off because of regulatory concerns.

Had NVIDIA been able to buy ARM, it would have been the biggest acquisition in the history of the semiconductor industry.

Chapter 3 – The AI Revolution & NVIDIA’s Dominance

This chapter deals with NVIDIA’s current dominance, which builds upon the foundation that it had been laying for years and years.

NVIDIA’s story as the leading GPU maker for gamers and enterprises took a new turn in 2012, when a research paper changed everything.

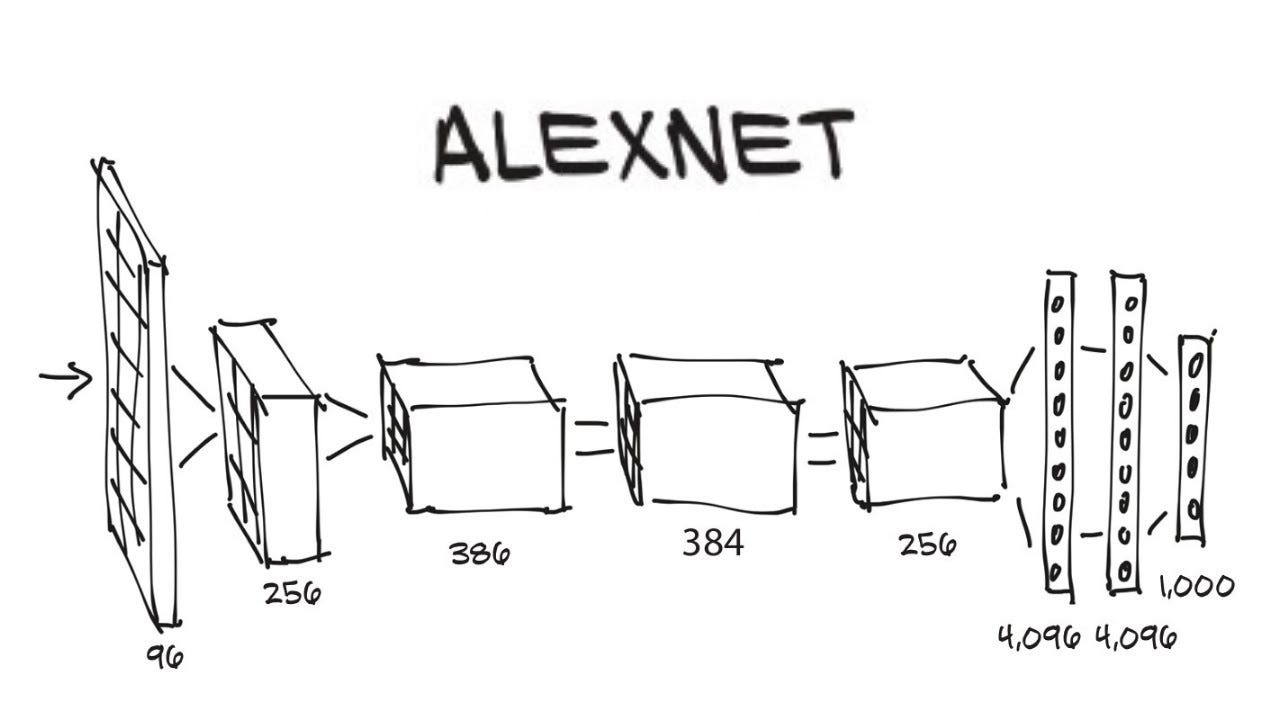

3.1 AlexNet

AlexNet is a neural network that was first presented in a research paper that came out of the University of Toronto in 2012. It won that year’s ImageNet image-recognition competition by a wide margin.

The paper was written by Geoffrey Hinton, who later won a Nobel Prize, and two of his students: Alex Krizhevsky and Ilya Sutskever.

In practical terms: training AlexNet required billions of arithmetic operations. CPUs of that era simply weren’t engineered to handle tens of millions of simultaneous computations in the kind of parallel way required by deep nets.

In the case of AlexNet, the network was actually split across two NVIDIA GPUs, each handling part of the computation, which substantially sped up training.

So, when the AI world discovered that it needed a massive amount of math calculations performed in parallel, the GPUs built for gaming found a brand new high-impact use.
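Here is a toy sketch of what splitting a network across two GPUs can look like. To be clear, this is not the AlexNet authors’ code, and the real split was more involved. It is a simplified illustration in plain Python with made-up names and numbers: each "device" holds half of a layer’s neurons, computes its half independently, and the two halves are joined at the end.

```python
def matvec(rows, vector):
    """Multiply a weight matrix (a list of rows) by an input vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in rows]

def split_across_two_devices(weights, vector):
    """Give each hypothetical device half of the neurons (rows) of the layer."""
    half = len(weights) // 2
    device0_out = matvec(weights[:half], vector)   # would run on GPU 0
    device1_out = matvec(weights[half:], vector)   # would run on GPU 1, at the same time
    return device0_out + device1_out               # join the two halves

# Made-up layer with 4 neurons and 2 inputs.
weights = [[1, 0],
           [0, 1],
           [1, 1],
           [2, -1]]
vector = [3, 4]

single = matvec(weights, vector)
split = split_across_two_devices(weights, vector)
print(single, split)
```

Both approaches give the same answer, but in the split version each device only has to store and compute half of the layer. That matters when a single GPU does not have enough memory to hold the whole network.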

This was the architectural advantage that GPUs had over CPUs, and since that moment the growth of NVIDIA’s market cap hasn’t slowed down.

NVIDIA has been spending billions of dollars on CUDA since the mid-2000s, working on its software stack and optimizing it for its GPUs.

This paid off for NVIDIA. 2012 was a breakthrough year for Artificial Intelligence and for NVIDIA.

3.2 Key moves & products

Right after 2012, the AI wave was clearly visible and NVIDIA was there to grab it from all sides.

The company started working on data-center GPUs that were purely optimized for AI tasks.

NVIDIA added Tensor Cores, specialized units optimized for AI workloads, to its GPUs.

NVIDIA also worked on low-latency interfaces that move data at higher bandwidth. Their experience in developing previous GPU interfaces helped a lot.

In 2016 NVIDIA built the DGX-1, a plug-and-play system with multiple NVIDIA GPUs clustered together.

The DGX-1 was a complete platform built specifically for companies working on AI applications like deep learning but it turned out that the DGX-1 worked for LLMs too.

You can think of DGX-1 as sort of a supercomputer that is purposefully designed for AI applications.

3.3 OpenAI enters the chat

After DGX-1’s release NVIDIA’s stock tripled and it was during this time that NVIDIA started making acquisitions that it saw could help it in AI.

In 2020 NVIDIA was about to buy the British semiconductor design giant ARM but regulators blocked the deal.

Right when the DGX-1 was released in 2016, NVIDIA donated one such system to a small startup called OpenAI.

When OpenAI released ChatGPT in late 2022, it became the fastest-growing consumer application in history at the time.

ChatGPT reached 100 million monthly active users in January 2023, and it relied, and still relies to this day, on NVIDIA’s CUDA.

ChatGPT’s release and initial success is what prompted Jensen Huang to say this:

“A new computing era has begun.”

This was a clear signal that companies especially those with an AI focus are transitioning to accelerated computing on GPUs instead of traditional CPU compute.

3.4 Gaming experience helped

What is interesting is that NVIDIA’s existing reputation helped a lot in building the brand it currently has in the AI industry.

NVIDIA already had experience shipping large volumes of chips. The company already had relationships with fabs and across the chip supply chain.

NVIDIA also had the marketing muscle to support its AI products, and years of revenue from selling graphics cards gave it the freedom to invest in strategic partnerships.

NVIDIA shifted its focus to the AI industry, and today it commands an estimated 70% to 95% share of the AI chip market.

3.5 Challenges & competition

NVIDIA is at the top. It’s in the strongest position it has ever been in, but it isn’t short of potential challenges.

Long-time rivals AMD and Intel are also working on their own GPUs as well as AI accelerators (chips optimized for AI). AMD is now catering to the budget gamer segment that NVIDIA seems to have forgotten.

Reportedly, there are at least 60 startups trying to give NVIDIA’s products a hard time at AI tasks.

NVIDIA uses TSMC’s fabs for its GPUs, and there are risks to TSMC’s operations as China threatens the island of Taiwan.

Actually, we recently covered TSMC in detail and why its operations are so crucial for companies like NVIDIA.

The White House also banned NVIDIA from selling its cutting-edge chips to countries like China in order to protect the US lead in AI infrastructure.

With the tussle between the US and China, NVIDIA is a big talking point. China’s President Xi and America’s President Trump will discuss the sale of NVIDIA’s latest chips in an upcoming meeting.

In addition to the geopolitical concerns, there are concerns that AI infrastructure is spending-intensive and any headwinds could slow progress.

Still, despite all these challenges, NVIDIA has so far maintained its lead thanks to its vertical integration from software stack to hardware, its business reputation and roughly 3 decades of experience shipping GPUs.

3.6 Why it matters for the world

At present, NVIDIA has a central role in the growth of AI infrastructure, and AI is the hot topic these days.

The rise of AI startups doesn’t seem to be cooling down anytime soon, and NVIDIA is the biggest beneficiary of this raw demand for its technology.

Newer kinds of AI workloads are here too. They don’t just need compute-intensive training; they also need inference, which means running a trained model to serve real-time responses, powering autopilots, edge devices and even smartphone apps.

Companies are investing blindly into data-centers with clusters of GPUs for AI training, building and testing their own AI models and NVIDIA is at the heart of it too.

Because of the mature CUDA ecosystem, NVIDIA is powering gaming, self-driving, robotics, cloud, AI, scientific research, all at the same time.

Interestingly, the innovations that NVIDIA helps develop through AI also help gamers. Features like DLSS, an AI-powered graphics upscaler, are a result of investments in AI.

White House Joins In

In January 2025, President Trump announced Project Stargate which is a $500 billion investment into AI infrastructure in the USA with NVIDIA being a key beneficiary.

NVIDIA being a key pillar in the AI industry is a key US asset and in April 2025, the Trump Administration banned the export of the company’s H20 chips to China.

The US believes that NVIDIA’s latest chips are critical to its victory in the AI race, and that’s why they’re being used as a lever in US relations with China.

NVIDIA’s newest AI chip is Blackwell, which is so important that it is set to be discussed in the upcoming meeting between President Trump and China’s President Xi Jinping.

The company is now at the forefront of geopolitics acting as a lever to gain advantage for both sides.

How Did NVIDIA Win It All?

Progressive Vision

From the very start, the vision was to solve tough problems in markets with high sales volume. That is what led NVIDIA to focus on computer graphics.

As one of the co-founders later said, they realized “video games were simultaneously one of the most computationally challenging problems and would have incredibly high sales volume.”

Even after solving graphics the company didn’t stop. They saw the importance of parallel compute long before anyone else and invested a lot in it early-on.

NVIDIA figured out that the same calculations that are used in rendering frames could be used for other intensive tasks and they shifted their focus from graphics to general compute.

Ecosystem

Building a chip is a great feat on its own but it isn’t the complete story. NVIDIA’s success came from the combination of a great chip and a seamless ecosystem.

The introduction of CUDA in 2006 laid the foundation. Initially, it was just a programming language and a compiler but with time it grew into a whole ecosystem for developers.

The extra effort that NVIDIA put into its GPU drivers also set it apart from the rest.

This focus on vertical integration allowed NVIDIA to become not only a leader in the gaming industry but also the dominant force in the AI industry.

As a commenter put it on Hacker News:

“CUDA is a huge anti-competitive moat … and that’s no accident.”

Reputation

Building a name for itself in the gaming industry wasn’t just a stepping stone for NVIDIA, it was the foundation that led the company to what it is now.

Even in the initial days Sega took chances on NVIDIA just because this small startup shipped their product even when it wasn’t a hit at first.

Shipping a product is a challenge of its own and NVIDIA built a legacy in the gaming industry shipping some of the most innovative technologies.

NVIDIA built its reputation in the gaming industry by learning timing, performance and marketing, and that helped the company in later years.

Timing

Across NVIDIA’s history, timing has played a key role in the company’s success.

In the early 90s when PC gaming was about to explode, the company entered 3D graphics.

When advancements in deep learning took off in 2012, the company was already ready to take charge with its established CUDA platform that it had been working on for half a decade by then.

Even in the crypto boom, NVIDIA was well provisioned to profit from gamers as well as those that wanted NVIDIA cards for crypto mining.

And even today, ever since 2016 when NVIDIA donated DGX-1 to OpenAI, the company has been making investments into AI companies strategically.

Courage to pivot

From the very start NVIDIA had the guts to pivot their strategy.

Early on when their first NV1 multimedia chip failed the company pivoted by changing the next chip’s design to industry standards.

Right after establishing its presence in the GPU market, NVIDIA built a totally new presence selling GPUs to consumers. This was a bold move for the time.

NVIDIA was able to know when something wasn’t working and they pivoted.

The company’s shift of focus from gaming GPUs to AI infrastructure is among the most extraordinary steps a company could take and still the company succeeded.

What’s Next For NVIDIA?

AI Infrastructure Era

NVIDIA is no longer just a GPU maker but a company that develops AI infrastructure. Their DGX platforms scale to the point of functioning like a supercomputer for AI.

In addition to the raw compute power, NVIDIA offers DGX Cloud for companies that are more comfortable renting compute.

In the future, most AI startups and enterprises may not even buy chips, and NVIDIA-powered compute could instead be distributed through cloud giants like AWS.

NVIDIA can just ship its DGX systems to cloud providers and they can figure out the service distribution to the end-user.

NVIDIA is also working on technologies like CUDA-X and NVIDIA AI Enterprise, which are more optimized for AI.

In the future we can see NVIDIA offer AI as a platform.

Edge Computing & Real-Time AI

When we think of AI we figure that it is something that lives totally in the cloud in massive datacenters crunching numbers somewhere in the desert.

While that is partly true, there is a growing need for running AI directly on the devices that need it, like cars, robots and drones.

This concept is called edge computing which means that we run AI where the action happens and not in some faraway server farm.

This already happens inside self-driving cars. Companies like Waymo have fleets of cars that use NVIDIA GPUs for taking action while on the road.

As more and more services make use of AI, the demand for edge computing will rise.

NVIDIA is betting big on edge computing because it is already powering a big chunk of autonomous robots, vehicles, smart city cameras, etc.

The company also recently launched smaller form-factor computers like the Jetson Orin that are specifically optimized for AI.

The interesting thing is that these devices also use the same CUDA stack that trains AI in data centers ensuring consistency across edge and cloud.

Robots

NVIDIA’s journey started with teaching computers how to render images through instructions (video games).

But with AI, those same chips are teaching computers to understand what they’re seeing (Computer Vision).

NVIDIA has led the shift from graphics to vision and that is why it is uniquely positioned to innovate in the upcoming robotics revolution.

“AI is software that writes itself. Robotics is AI that interacts with the real world.” said Jensen Huang in 2024.

Robots need to be able to see the world and understand and identify images through deep learning.

They need to understand context through AI inference, make real-time decisions and take action. This is edge computing.

For this robotics journey, NVIDIA has a full stack from simulation to deployment.

Even today we have warehouse automation that is being developed by Amazon Robotics and Boston Dynamics. They use NVIDIA’s compute stack for object detection, motion planning and navigation.

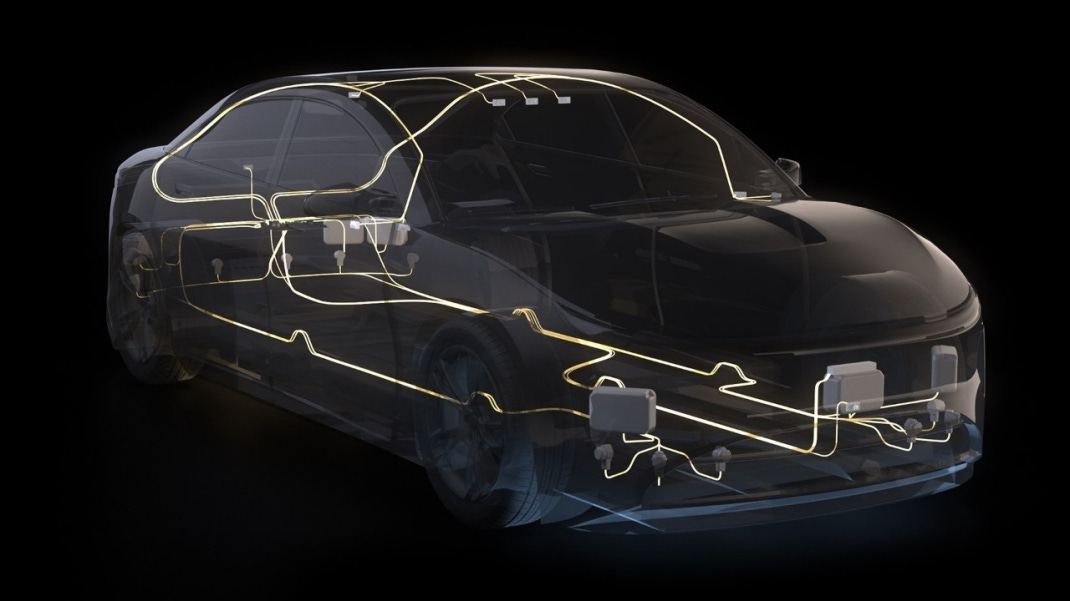

The NVIDIA DRIVE platform powers hundreds of car models from Mercedes-Benz to BYD allowing their collection of LiDAR, radars and cameras to function together and take real time decisions.

Robotics is a giant simulation. Robots observe, then try to identify and make sense of what they are seeing. The robot runs simulations in its head and makes decisions.

All this is seamlessly carried out by NVIDIA’s stack.

Ending Notes

For me personally, NVIDIA is nostalgia. The very first computer that I played games on as a 9-year-old had an NVIDIA graphics card.

I think it was a GeForce GTS 450 by EVGA. It was the first graphics card that I had ever put my hands on.

I still remember playing Need for Speed Most Wanted for hours on that graphics card.

After that, I had access to a laptop that also had a dedicated NVIDIA GPU. It was a GeForce 310M and my lord, I used that laptop to its death.

The GPU still works after more than a decade. I remember going through the painful process of installing NVIDIA drivers on Linux back in the 2018 era.

It was painful on Linux because it was a hell of an old card, but the funny thing I remember is that the NVIDIA Control Panel allowed me to overclock my monitor from 60 to 85 Hz :)

At present, I’m using an old card from AMD. And even though it works fine for me, I still want to try out the new features that NVIDIA has packed into its newer graphics cards, especially ray-tracing.

Anyways, I hope you enjoyed the article. If you’ve made it this far, would love to hear from you on NVIDIA’s journey in the comment section below!
