The Evolution of Computers: How It All Began

You use them every day; now find out where they came from

Hey everyone

Welcome to another Nexus post.

True to our goal of making tech more understandable, especially from a Pakistani lens, today we’re sharing one of our deepest dives yet.

We’re going all the way back - before smartphones, before laptops, before even electricity - to answer one big question:

Where did computers actually come from?

You probably use one every single day. But most of us never stop to ask how these machines went from room-sized monsters to the little black rectangles we now call “phones.”

Let’s get into it.

The computer you’re using right now didn’t just fall out of the sky. It’s the result of decades of failure, constant hard work, genius minds, and tons of curiosity. There’s a whole story behind it, one that most people have never heard.

Why should you care?

Because computers quite literally run everything - from your phone to your bank account and even your fridge. Knowing how they came to be helps you understand where we’re heading next.

The invention of computers isn’t just a story of machines; it’s a story of human ambition. We went from mechanical calculators to room-sized giants to chips smaller than your fingernail, with most of that leap packed into less than a century.

And now? Computers have changed:

The way we work

The way we learn

The way we live

They have rewired the way we even think, so the least we could do is find out about how it all began, right?

And that is exactly what we will be doing in today’s piece. We’ll start at the birth of computing, move through the electronic age, explore the personal computer boom, and end in today’s world of smart tech.

Now, let’s rewind time and see how it all began.

The Birth of Computing (2300 BCE – 1840s)

Before silicon chips and 4K monitors, computation started with something far simpler: counting with stones, sticks, and eventually, the abacus. These early tools laid the foundation for what was about to become one of humanity’s most disruptive inventions.

Over time, our ambitions also started growing. From Blaise Pascal’s mechanical calculator in the 1600s to Charles Babbage’s visionary Analytical Engine in the 1800s, brilliant minds kept pushing the boundaries.

Similarly, we also had Ada Lovelace, who is often considered the world’s first programmer. She was the one who saw the potential of these machines before they even existed.

The interesting thing about all these people was that they weren’t just tinkerers, doing something for fun; they had a vision for setting the stage for what was to come.

Let’s break this down, one milestone at a time.

The Conceptual Roots: Mechanical Computing Devices

Abacus (2300 BCE and onward)

The word “abacus” is derived from the Greek word “abax”, which means “tabular form”. The abacus was the first counting machine. Before that? It was fingers, stones, or various kinds of natural material. The abacus didn’t come from one place. Different versions of it, each looking a bit different from the others, appeared in several civilizations over time.

The abacus wasn’t something out of a cyberpunk world; it was a simple device that a person could operate just by sliding some beads, and it could handle addition, subtraction, multiplication, and division.

In a world without paper or electricity, the abacus turned abstract math into a physical interaction. You could see numbers move in front of your eyes, and that was revolutionary at the time.

Even today, the abacus is used in parts of Asia, particularly in Japan and China, to teach children the fundamentals of mathematics and build their mental arithmetic skills. It is also still useful for blind people, helping them learn how to count.

Pascaline (1642)

Fast forward a couple of thousand years to 17th-century France, where a teenage Blaise Pascal designed and built the Pascaline between 1642 and 1644. The machine was only able to perform simple arithmetic functions like addition and subtraction, with numbers being entered by manipulating its dials.

The reason Pascal invented the machine was to help his father with tax collection, so it was the first business machine too (if one does not count the abacus). And he didn’t stop after making one Pascaline; he built 50 of them over the next 10 years.

The Pascaline used a series of gears and wheels to perform addition and subtraction. You’d input numbers by rotating dials, and the result would show through small windows. It was mechanical, limited, and expensive, but it showed that one could simply use machines to perform mathematical functions.
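If you are curious what “gears and wheels” doing addition looks like, here is a rough Python sketch. It is only an illustration and not a model of Pascal’s actual mechanism: the function names `pascaline_add` and `read_wheels` are made up for this post, and each list entry simply stands in for one decimal wheel. The key idea it shows is the carry: when a wheel rolls past 9, it nudges the next wheel forward by one.

```python
def pascaline_add(wheels, amount):
    """Add a non-negative integer to a row of decimal wheels.

    wheels[0] is the ones wheel, wheels[1] the tens wheel, and so on.
    Anything that overflows the last wheel is lost, as on a real odometer.
    """
    wheels = list(wheels)  # work on a copy so the input stays untouched
    position = 0
    while amount > 0 and position < len(wheels):
        amount, digit = divmod(amount, 10)  # peel off one digit to dial in
        carry = digit
        carry_at = position
        # Turn this wheel, then let any carry ripple to the wheels after it.
        while carry and carry_at < len(wheels):
            total = wheels[carry_at] + carry
            wheels[carry_at] = total % 10
            carry = total // 10
            carry_at += 1
        position += 1
    return wheels


def read_wheels(wheels):
    """Read the number shown in the result windows."""
    return int("".join(str(d) for d in reversed(wheels)))


if __name__ == "__main__":
    wheels = pascaline_add([0] * 6, 1642)
    wheels = pascaline_add(wheels, 58)
    print(read_wheels(wheels))
```

Dialing in 1642 and then 58 forces two carries in a row, which is exactly the situation a purely mechanical design has to get right.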

Leibniz’s Stepped Reckoner (1673)

Gottfried Wilhelm Leibniz was a German philosopher, mathematician, and political adviser, important both as a metaphysician and as a logician. He was also well known for his independent invention of the differential and integral calculus.

This guy was the inventor of the Stepped Reckoner, and unlike the Pascaline, this machine was able to multiply and divide as well, thanks to a clever “stepped drum” mechanism he designed. Not to forget, the Stepped Reckoner was built by expanding on the French mathematician Blaise Pascal’s ideas.

Leibniz believed that logic and calculation were fundamental to understanding the universe, and his device reflected that philosophy.

In short, the idea that calculation could settle arguments better than debate was the driving force behind this machine.

Charles Babbage and the First Programmable Computer Blueprint

Difference Engine (Proposed in 1822)

Charles Babbage was an English mathematician and inventor who is credited with having conceived the first automatic digital computer. When he looked at the mess of human error in mathematical tables, the first thing that popped up in his mind was:

“Why not let a machine do this?”

And from there, his journey to being called the “Father of Computers” started.

He designed the Difference Engine to automate the calculation of polynomial functions and print accurate tables. These things were vital for:

Navigation

Engineering

Science

The Difference Engine was more than just a simple calculator, however. It could handle a series of calculations using different variables to tackle complex problems. Unlike basic calculators, it had storage to temporarily hold data for later steps. And instead of writing results by hand, it was designed to stamp its output onto metal, which could be used to print the results directly.
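The trick behind the Difference Engine is the method of finite differences: once you know a few starting values of a polynomial, every later table entry can be produced with additions alone, which is something gears can do. Below is a small Python sketch of that idea. It is not a simulation of Babbage’s hardware; the function names and the example polynomial (x² + x + 41) are chosen here purely for illustration.

```python
def initial_differences(values):
    """Build the starting column: f(0), first difference, second difference, ...

    `values` must hold degree + 1 consecutive values of the polynomial.
    """
    column = []
    row = list(values)
    while row:
        column.append(row[0])
        # Replace the row with the differences between neighbours.
        row = [b - a for a, b in zip(row, row[1:])]
    return column


def tabulate(column, count):
    """Produce `count` table entries using additions only."""
    column = list(column)
    table = []
    for _ in range(count):
        table.append(column[0])
        # Each register absorbs the one below it, from top to bottom.
        for i in range(len(column) - 1):
            column[i] += column[i + 1]
    return table


if __name__ == "__main__":
    # Seed with f(0), f(1), f(2) for f(x) = x*x + x + 41.
    seed = [x * x + x + 41 for x in range(3)]
    print(tabulate(initial_differences(seed), 6))
```

Notice that after the three seed values are worked out, no multiplication happens anywhere. That is why a machine built from adding mechanisms could, in principle, print whole tables without a human touching the numbers.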

The full engine, designed to be room-sized, was never built, at least not by Babbage, mostly due to cost, complexity, and a lack of industrial precision. But the vision he had was crystal clear:

Eliminate human error

Automate calculation

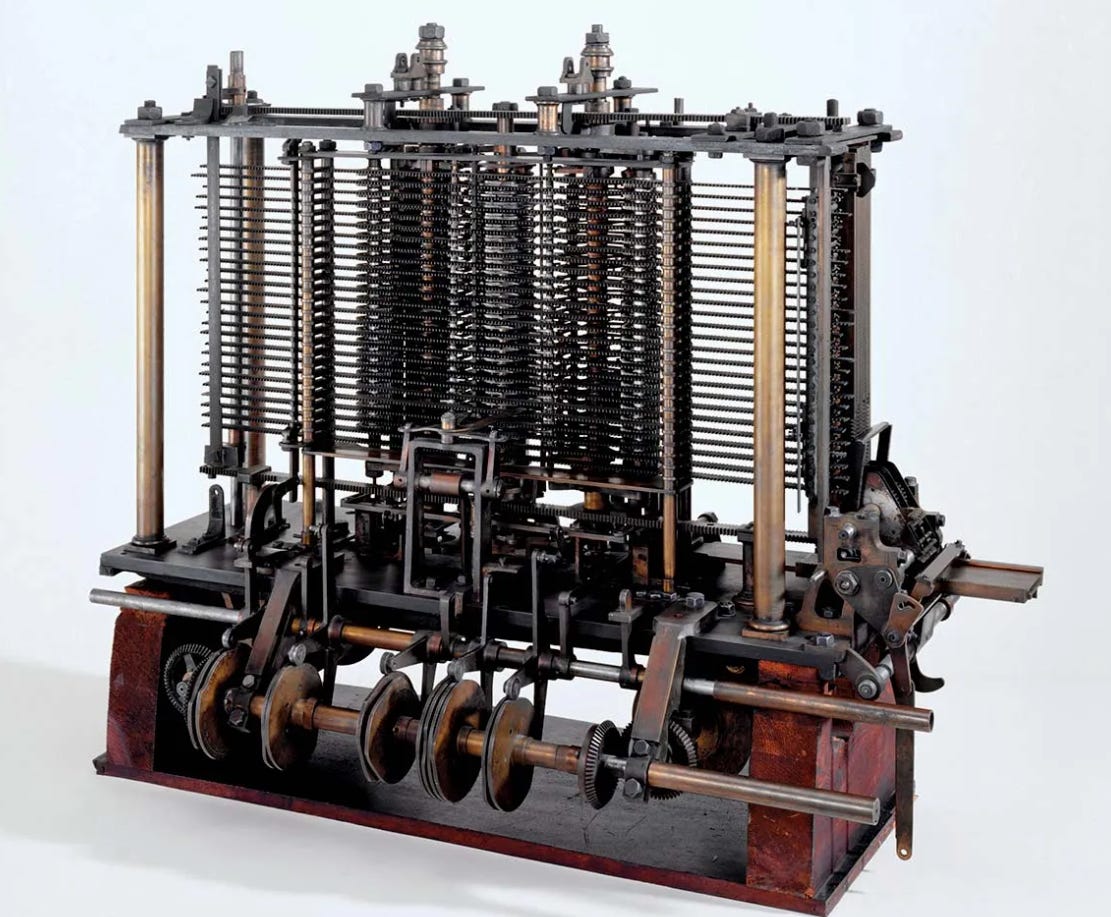

Analytical Engine (Proposed in 1837)

The Analytical Engine, generally considered the first computer, was designed and partly built by Charles Babbage in the 19th century (he worked on it until he died in 1871).

While he was working on his first machine, the Difference Engine, which the British government had commissioned, Babbage began to imagine ways to improve it. Above all, he thought about generalizing its operation so that it could perform other kinds of calculations. By the time funding ran out for his Difference Engine in 1833, he had conceived of something far more revolutionary: a general-purpose computing machine called the Analytical Engine.

The Analytical Engine was to be a general-purpose, fully programmable, automatic mechanical digital computer. It was to be able to perform any calculation set before it.

The interesting thing is that there is no evidence that anyone before Babbage had ever conceived of such a device, let alone attempted to build one.

This machine was designed to consist of four components:

The mill

The store

The reader

The printer

These map closely onto the essential components of every computer today. The mill was the calculating unit, similar to the central processing unit (CPU). The store was where data was held before processing, similar to memory and storage in today’s computers. The reader and printer were the input and output devices.

The machine was designed to loop, branch, and be programmed to handle any calculation, not just one. It was never completed, but its architecture laid the groundwork for what computers would eventually become.
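To make the mill, store, reader, and printer feel less abstract, here is a toy Python sketch. Everything in it is hypothetical: the card names (`ADD`, `MUL`, `JUMP_IF_POSITIVE`, `PRINT`) and the function `run_engine` are invented for this post and are not Babbage’s actual instruction set. It only shows how those four parts, plus a branch, are enough to get a loop.

```python
def run_engine(cards, store):
    """Run a deck of (made-up) instruction cards against a store of values."""
    store = dict(store)   # the store: holds numbers between steps
    printed = []          # the printer: collects output
    position = 0          # the reader: which card is being read next
    while position < len(cards):
        op, *args = cards[position]
        position += 1
        if op == "ADD":                   # the mill: arithmetic
            target, a, b = args
            store[target] = store[a] + store[b]
        elif op == "MUL":
            target, a, b = args
            store[target] = store[a] * store[b]
        elif op == "JUMP_IF_POSITIVE":    # branching, which makes loops possible
            name, destination = args
            if store[name] > 0:
                position = destination
        elif op == "PRINT":
            printed.append(store[args[0]])
        else:
            raise ValueError(f"unknown card: {op}")
    return printed


if __name__ == "__main__":
    # A deck that multiplies result by n, counts n down, and repeats.
    factorial_deck = [
        ("MUL", "result", "result", "n"),
        ("ADD", "n", "n", "minus_one"),
        ("JUMP_IF_POSITIVE", "n", 0),
        ("PRINT", "result"),
    ]
    print(run_engine(factorial_deck, {"n": 5, "result": 1, "minus_one": -1}))
```

Swap in a different deck of cards and the same “engine” computes something else entirely. That is the whole point of general-purpose: the machine stays the same, only the program changes.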

The truth is that:

Babbage saw the future.

But the world just wasn’t ready for it.

Ada Lovelace: The First Programmer (1843)

Ada Lovelace was an English mathematician and an associate of Charles Babbage. She wrote what is considered the first program for Babbage’s proposed Analytical Engine, which is why she has been called the first computer programmer. Lovelace became interested in Babbage’s machines as early as 1833, when she was introduced to Babbage by their mutual friend, author Mary Somerville.

Ada Lovelace didn’t just understand Babbage’s Analytical Engine, she saw its potential. In 1843, she translated a paper about the machine and added her own extensive notes. One of them was an algorithm to calculate Bernoulli numbers, which is now considered the first computer program ever written.
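For a taste of the problem she picked, here is how Bernoulli numbers can be computed in modern Python. To be clear, this is not a reconstruction of Lovelace’s program: it uses a standard textbook recurrence and the convention where B₁ = −1/2, and the function name `bernoulli_numbers` is just a label chosen for this post.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n):
    """Return [B_0, B_1, ..., B_n] as exact fractions.

    Uses the recurrence sum_{k=0}^{m} C(m+1, k) * B_k = 0 for m >= 1,
    solved for B_m at each step.
    """
    numbers = [Fraction(1)]  # B_0 = 1
    for m in range(1, n + 1):
        total = sum(comb(m + 1, k) * numbers[k] for k in range(m))
        numbers.append(-total / (m + 1))
    return numbers


if __name__ == "__main__":
    for index, value in enumerate(bernoulli_numbers(8)):
        print(index, value)
```

Each new number depends on all the ones before it, so the calculation needs stored intermediate results and a repeated loop. That makes it a good showcase for a machine that was meant to do more than one fixed sum.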

Ada Lovelace’s efforts have not been forgotten. The early programming language Ada was named for her, and the second Tuesday in October has become Ada Lovelace Day, on which the contributions of women to science, technology, engineering, and mathematics are honoured.

Ada also predicted that machines like the Analytical Engine could one day manipulate symbols, create music, and express ideas, not just crunch numbers.

The truth is:

She wasn’t just coding.

She was forecasting the future of computing.

From Human Computation to Machine Automation

Long before machines took over the job, computation was human. People were employed as “computers,” working through calculations by hand for all sorts of purposes.

The devices you saw earlier, including the abacus, Pascaline, and Babbage’s engines, weren’t just clever gadgets. They were the start of a new journey. They laid the groundwork for automation, algorithms, and abstract thinking - the core of modern computing.

All this didn’t just help speed things up. It changed the entire nature of how we solve problems. Machines didn’t just assist humans, they started to replace them in the thinking process. Well, of course, you needed humans to operate them, but the machines did most of the work when it came to the actual calculations.

And this was just the beginning of a new era.

The Generations of Early Electronic Computers (1930s – 1970s)

Mechanical computing was a brilliant start. We had gears and levers solving problems humans couldn’t handle alone.

But the real shift?

It came with electricity.

That’s when machines stopped just moving and started thinking faster.

In this section, we will be diving into the ENIAC, UNIVAC, and Colossus, the giants that were the most important pieces in the electronic age. These weren’t just upgrades. They were a whole new species of machine.

Over time, more computers followed, each laying the groundwork for the modern CPU sitting in your phone or laptop right now. But how did we get from those room-sized beasts to what you carry in your pocket?

Let’s break it down.

Setting the Scene: War, Power, and the Need for Speed

The rise of electronic computing wasn’t born in peace, it was forged in crisis.

World War II demanded speed, precision, and power. Traditional manual calculation wasn’t enough for all that; it just couldn’t keep up with the demands of the war.

Governments needed machines that were able to:

Crack enemy codes

Predict missile trajectories

Analyze military data

They wanted machines to perform all these tasks at speeds no human could match. And guess what the result was?

A race for electronic brains.

Not for convenience.

But for survival.

Turing’s Legacy: The Theory Behind It All

Let me tell you about the guy who practically laid the foundations of everything related to computers - Alan Turing. He was a British mathematician and logician who made major contributions to:

Mathematics

Cryptanalysis

Philosophy

Mathematical biology

Over time, his contributions evolved into the fields we now know as computer science, cognitive science, artificial intelligence, and artificial life.

In 1936, he introduced the idea of a universal machine. It proposed a machine that could read instructions, process data, and produce output. Sound familiar? That’s the DNA of every modern computer.
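Here is a tiny Python sketch of that idea: a machine that reads a symbol, looks up a rule, writes a symbol, and moves left or right along a tape. This is a simplified illustration rather than Turing’s original formulation, and the names (`run_turing_machine`, the `start`, `carry`, and `halt` states, the `_` blank symbol) are choices made for this post. The rule table below makes the machine add 1 to a binary number.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Run a rule table on a tape and return the final tape contents.

    rules maps (state, symbol) -> (symbol_to_write, "L" or "R", next_state).
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
    return "".join(cells[i] for i in sorted(cells)).strip(blank)


# Rule table for "add one to a binary number".
INCREMENT = {
    ("start", "0"): ("0", "R", "start"),   # walk right to the end of the number
    ("start", "1"): ("1", "R", "start"),
    ("start", "_"): ("_", "L", "carry"),   # hit the end, turn around
    ("carry", "1"): ("0", "L", "carry"),   # 1 + 1 = 0, keep carrying
    ("carry", "0"): ("1", "L", "halt"),    # 0 + 1 = 1, done
    ("carry", "_"): ("1", "L", "halt"),    # ran off the left edge: new digit
}

if __name__ == "__main__":
    print(run_turing_machine(INCREMENT, "1011"))
```

The simulator itself never changes. Hand it a different rule table and it does a different job, which is the “universal” part of the universal machine.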

Turing didn’t stop at theory. During WWII, he played a central role in cracking the Enigma code, giving his country and its allies a massive edge over the Germans. The wider codebreaking effort also pushed the world closer to programmable machines like Colossus (we will talk about this machine in a bit).

I bet many of you must have heard of the famous Von Neumann architecture, still taught in CS classes today, right? Yeah, that structure with memory, input/output, and control was influenced by Turing’s legendary universal machine.

In short, Turing didn’t just imagine the computer.

He gave us the why, the how, and the urgency to build it.

1st Generation Computers (1946–1957)

This era kicked off the electronic computing revolution. These weren’t some cute computers with RGB lights in them; these were room-sized beasts that consumed electricity like it was nothing and ran hot enough to bake bread.

But despite all that, these big machines cracked open a new world and laid the groundwork for everything that came next.

The Technology Behind the Machines

These machines ran on vacuum tubes, fragile glass components that acted as electronic switches.

For input, they used punch cards: stiff paper cards with holes punched in them that let people feed in data and instructions.

And for storage? They relied on magnetic drums, which spun like old-school washing machines to hold memory. This was cutting-edge technology back then.

Key Machines of the First Generation

Colossus, built in Britain during WWII, is often regarded as the first programmable electronic digital computer. It wasn’t just a codebreaker; it helped crack Nazi encryption and proved that machines could outthink humans at speed.

After the war, the U.S. dropped its own monster, known as ENIAC. This computer was huge, packed with around 18,000 vacuum tubes. It was a true multi-purpose beast that could be reprogrammed to handle different tasks such as:

Artillery calculations

Weather prediction

Later came UNIVAC I, one of the first commercial computers. This wasn’t just a lab experiment; businesses and government agencies relied heavily on it. It became famous when it correctly predicted the outcome of the 1952 U.S. presidential election.

Many other computers were included in this generation as well, such as:

EDVAC

IBM-701

IBM-650

Disadvantages of First-Generation Computers

These machines might’ve been a breakthrough, sure. But let’s not pretend they were perfect. They came with a truckload of problems:

They were massive

They were very expensive

They required constant maintenance

They consumed an absurd amount of electricity

They were very hard to program

2nd Generation Computers (1958–1963)

This is the era where computers finally stopped acting like high-maintenance monsters and started becoming a bit more reliable. We moved on from vacuum tubes (which were basically glass grenades) to transistors (a game changer in every way).

These computers were still big, but now they were faster, cheaper, and didn’t overheat like a toaster left on overnight. It was the beginning of computing for business, science, and even early military systems, not just top-secret labs.

The Technology Behind the Machines

Transistors replaced vacuum tubes, and that alone changed the game. They were smaller, cooler (literally), and way more efficient.

For input, punched cards were still in the picture, but for storage, magnetic core memory started showing up. You can think of it as the ancestor of your RAM.

For output, printers and early displays began to pop up.

Programming also got a facelift. Low-level assembly was still there, but now high-level languages like FORTRAN and COBOL came into play, which made programming approachable for people who weren’t math geniuses.

Key Machines of the Second Generation

Now, let’s discuss some of the most important computers of this generation.

IBM 1401: One of the most popular business computers of the time. It brought computing to offices, not just research centers.

IBM 7090: A transistorized monster used for scientific calculations and early space missions. NASA used this thing.

CDC 1604: Developed by Control Data Corporation, one of the first commercial computers to fully utilize transistors.

Many other computers were included in this generation as well, such as:

IBM 1620

IBM 7094

UNIVAC 1108

Disadvantages of Second-Generation Computers

Sure, this was a huge leap forward, but it still wasn’t smooth sailing. These machines had their own set of headaches:

They were still physically large (just less so than before)

They required specialized environments (air conditioning, power backup)

Programming was easier, but still far from user-friendly

The hardware was better, but still prone to failure

The storage was limited

Input/output devices were slow

3rd Generation Computers (1964–1970)

This is when things started to look less like science experiments and more like actual machines that people could use without a PhD in electrical engineering. Guess what the big upgrade was? Integrated Circuits! These were tiny chips that packed dozens of transistors into a single component.

This was the one shift that made computers faster, smaller, and way more powerful.

In this generation, computers became more than just military toys or business machines. They were inching closer to the public. This is the era where time-sharing started, meaning one computer could serve multiple users at once.

The Technology Behind the Machines

The biggest change was switching to integrated circuits. These chips packed multiple transistors into a single unit, meaning less size, less heat, and more speed.

As for memory, we still had magnetic core memory, but it got faster and more stable. Storage tech also took a big jump; now you had removable disks, not just magnetic drums.

Another big change?

Monitors and keyboards were introduced and started replacing punched cards. Computers were finally becoming a little more human-friendly.

Other than that, programming languages had also matured. BASIC and PASCAL showed up, making software development more approachable.

Key Machines of the Third Generation

Now, let’s discuss some of the most important computers of this generation.

IBM System/360: This one was the star of the show. It was the first true family of compatible computers, so the same software could run across different models. Businesses, government, science: quite literally, everyone used it.

Honeywell 6000 series: A powerful system for business and academic computing. Introduced features like multiprocessing.

DEC PDP-8: Often called the first “minicomputer.” It brought computing into smaller labs and even schools. A big step toward personal computers.

Many other computers were included in this generation as well, such as:

IBM-370/168

TDC-316

Disadvantages of Third-Generation Computers

Yeah, they were better - no doubt. But perfect? Not even close. Here’s where they still struggled:

Integrated circuits were revolutionary, but still expensive to manufacture

Systems were complex and needed trained operators

Heat and power issues weren’t gone, just reduced

Still not affordable for home users

Stability issues in software and OS design

The Rise of Personal Computers (1971–1999)

The invention of the microprocessor was the real game-changer.

Before this, computers were locked behind the gates of governments and giant corporations. But in the early 1970s came the Intel 4004, the first commercially available microprocessor. This tiny chip was able to pack the entire brain of a computer into something that could fit in your hand. Later on, the Intel 8080 landed, giving birth to the first wave of personal computers.

These inventions changed everything.

Computers were no longer intimidating machines for engineers. They were turning into tools for creativity. A whole new generation of tech companies, visionaries, and hackers was about to step in, and nothing would ever be the same again.

Let’s break it all down.

The Microprocessor Revolution (Early 1970s)

Up until this point, computers were still room-hogging monsters, even if they had gotten smaller and smarter. But in 1971, a team at Intel that included Ted Hoff and Federico Faggin designed the Intel 4004. This tiny chip packed all the processing power a machine needed onto a single piece of silicon.

That was the birth of the microprocessor, and it flipped the entire game.

By 1974, Intel came out with the 8080, a more powerful chip that would go on to power early personal computers. Suddenly, computing wasn’t just for scientists, governments, or corporations. Now, it was inching toward something you could put on a desk at your home.

What’s interesting is just how big a leap computers took thanks to the microprocessor:

- Their size was reduced massively

- They became far more affordable

- Their power consumption dropped sharply

And despite all of this, their computing power was impressive for the time. This was the foundation for the personal computer boom. The revolution had officially left the lab and entered the garage.

Altair 8800 (1975): Hobbyists Enter the Game

The Altair 8800 was created by Ed Roberts, founder of MITS (Micro Instrumentation and Telemetry Systems). It’s widely considered the spark that lit the personal computer revolution.

This machine was basic. No screen. No keyboard. No friends. Just a metal box with blinking lights and switches. It was sold as a DIY kit through Popular Electronics magazine, which made it very popular among hobbyists and tinkerers.

But it wasn’t just the hardware that mattered - it was the movement it inspired.

The Altair gave rise to the Homebrew Computer Club in Silicon Valley, a legendary group where tech pioneers gathered, swapped ideas, and laid the foundation for the modern tech world.

Psst… want to know a secret?

A young Bill Gates and Paul Allen saw the Altair and decided to write a version of BASIC for it. That small step became the first product of Microsoft, and the rest is history.

In short, the Altair 8800 wasn’t the best product, but it gave birth to a very important era.

Apple, IBM, and Commodore: The Giants Take Over

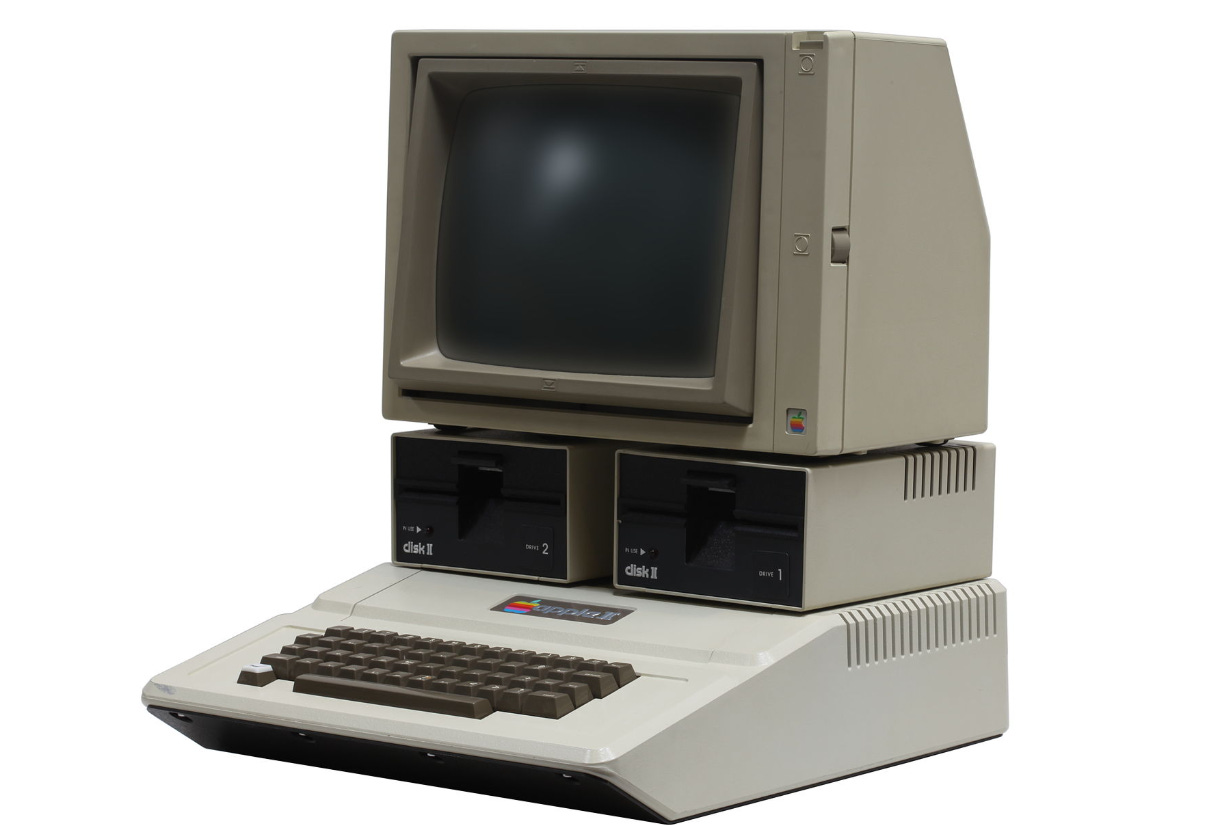

Apple I & II (1976–77)

In the mid-1970s, two guys from California changed the face of computing forever. Yes, I am referring to Steve Wozniak and Steve Jobs.

Wozniak was the engineering genius, the guy who could build brilliant machines with minimal parts, while Jobs was the visionary salesman who saw what tech could become, not just what it was.

The Apple I was Wozniak’s baby. It was one of the first computers sold as a fully assembled circuit board rather than a kit you had to solder together yourself. That alone made it stand out in a world dominated by DIY kits like the Altair.

It was pretty basic. It didn’t come with a case, monitor, or keyboard; users had to provide those. But what it did offer was ease. And in 1976, that was revolutionary.

Jobs, on the other hand, handled the marketing, convincing a local computer shop to buy 50 units up front. And guess what? He did an amazing job selling the stock.

Then came the Apple II, and this one changed the game completely.

Color graphics

Sound

Expandable hardware

Sleek plastic

And the best part?

It wasn’t just for nerds anymore.

It was for schools, homes, and small businesses.

The Apple II became one of the first mass-market personal computers, and its success turned Apple into a serious player.

Woz made it work

Jobs made it matter

IBM PC (1981)

By 1981, personal computers were gaining steam, but mostly among hobbyists and small players. Pretty soon, though, things started to change.

The IBM PC (Model 5150) dropped, and it flipped the industry. It was created by a small team within IBM, led by Don Estridge at the IBM Entry Systems Division in Boca Raton, Florida.

Many of you might be wondering: “What made this computer a Big Deal?”

Tons of things made it a big deal:

Legitimacy:

When IBM spoke, the business world paid attention. This wasn’t a garage experiment, it was a machine backed by corporate muscle.

Open Architecture:

IBM used off-the-shelf parts and published the machine’s technical details, which made it possible for other manufacturers to build compatible machines. That openness led to a flood of clones and a whole PC ecosystem.

MS-DOS:

Instead of building their own OS, IBM turned to a small company called Microsoft. Bill Gates and his crew supplied MS-DOS, which quickly became the standard operating system across thousands of PCs.

The IBM PC wasn’t flashy. But it defined the market, and it laid the foundation for the Microsoft + Intel alliance that later became known as “Wintel.” And this combo? It still dominates the market.

Commodore 64 (1982)

The Commodore 64 was developed by Commodore International, led by Jack Tramiel, a fierce businessman who believed in making computers affordable for the masses.

The machine was engineered by a team under Robert Russell. Bob Yannes (who created the famous SID sound chip) and Albert Charpentier (the main hardware designer) both played an important role in the design of this machine.

The Commodore 64 was sold as a standalone unit (just the keyboard-computer combo). Users had to plug it into an external monitor or a TV to use it. This was part of what made it so affordable and appealing to home users: it used hardware people already had.

It was a multipurpose machine, and it was available for the average Joe. Different people used this machine for various tasks, such as:

Playing legendary games like Summer Games and Impossible Mission.

Learning to code using BASIC.

It was even used in classrooms; a whole generation got introduced to computing via the C64. There were other reasons this machine mattered in the 1980s as well:

It was massively popular among people

It offered insane value for money

It allowed users to program it right out of the box

It allowed users to play games on it

The Software Layer: Operating Systems and Ecosystems (Mid 1980s)

So far, we’ve talked about chips, wires, and metal boxes. But the truth is, hardware without software is simply useless. And as machines shrank and hit people’s desks, it was software that brought them to life.

Programming languages like BASIC kicked the doors open for everyday users. Suddenly, you didn’t need to be a lab coat-wearing genius to write a program, you just needed a curious mind and a blinking cursor.

Then came the operating systems. MS-DOS ruled the IBM world, text-based but powerful. It became the backbone of early computing. It wasn't pretty, but it worked. And more importantly, it standardized the experience.

After that, other important applications, including word processors, spreadsheets, and even games, were launched, and for the first time, people had a reason to own a computer beyond just tinkering.

This was more than just a software layer.

It was the birth of ecosystems.

Machines now had a purpose.

The GUI Revolution: Point-and-Click Era Begins (Mid 1980s – Early 1990s)

This was the moment computers stopped being just for nerds who knew how to use the command line interface and started becoming beginner-friendly machines.

It all began at Xerox PARC, where the idea of a Graphical User Interface was born. But Xerox didn’t capitalize on it.

Then came Apple.

In 1984, the Apple Macintosh took the stage. It introduced everyday users to point-and-click computing. No more command lines, just visual simplicity.

Microsoft also wasn’t far behind. In 1985, it launched Windows 1.0, a GUI layered over MS-DOS. It was not the best, sure, but it was the start of a visual computing empire.

And let’s not forget Linux (btw I use Fedora). Though it started out as a command-line system in the early '90s, today it powers everything from Android phones to desktops with awesome GUIs like GNOME and KDE.

Fast forward to now, we have:

Windows 11

macOS 15 Sequoia

Modern Linux distros

What started with icons and a mouse click is now full-blown immersive computing. And it all began here.

1990s: Personal Computing Becomes Normal

By the time the ‘90s hit, computers weren’t rare. They were everywhere: homes, classrooms, and offices. The idea of a personal computer had fully landed.

Even the GUI game was getting better with each passing day.

Windows 95 dropped a clean GUI and plug-and-play.

Microsoft Office became the productivity standard.

Kids were playing Minesweeper.

Students were typing up reports.

Families were logging onto the early internet, which was quite slow, but it was game-changing.

Speaking of the internet, if you are interested in finding out how it came into being and how it went from zero to hero, check out this post: How We Got Online: History Of The Internet.

In short, the internet emerged during the 1990s and turned PCs from standalone machines into connected gateways. This was the moment computers stopped being a luxury or nerdy hobby… and became essential.

The Age of Smart Computing (2000–Present)

This era is where things started getting advanced. In the past couple of decades, so many new technologies have emerged. We’ve moved far beyond desktops and floppy disks. Today’s computing is fast, connected, and often invisible. But all this didn’t happen overnight. The 21st century can be broken down into three major waves of change that reshaped the world of machines.

- First came the last desktop decade (2000–2006), when computing still lived on your desk and the internet was growing, but not yet mobile.

- Then came the mobile explosion (2007–2014), driven by smartphones and lightweight laptops, which put computing into our hands and pockets.

- Finally, the rise of the cloud and artificial intelligence (2015–Present) redefined computing as something that’s always on and always synced.

Let’s unpack each of these phases and see how computing evolved from something you use to something your whole world depends upon.

Era 1: Before Laptops: The Last Desktop Decade (2000–2006)

In the early 2000s, desktop towers were still the heart of computing. Whether it was for work, study, or gaming, the average Joe interacted with a big CRT monitor, wired peripherals, and heavy-duty hardware that even John Cena couldn’t lift.

Laptops were there, but they were expensive, and mostly used by professionals or universities. Back then, people didn’t care about portability.

Broadband Replaces Dial-Up

Now, about the internet.

Dial-up was dying. It was slow and unreliable. Then came broadband: DSL and cable connections that were faster, always on, and didn’t tie up your landline. People started shifting to broadband because it was simply better, but it took some time to be fully adopted.

Meanwhile, most users were still connected via wired Ethernet. Wi-Fi was becoming known, and coffee shops and a few lucky homes started installing routers, but it wasn’t reliable yet, and wired connections were still the default.

Heavy Local Apps Were the Norm

Back then, software didn’t mean “click and download.” It meant going to a store, buying physical disks, and installing big, standalone programs off CDs. Think:

Microsoft Office for school and work

Adobe Photoshop for anything visual

Winamp, Windows Media Player, and iTunes to manage your music

And if you were into gaming? You were popping in CDs, installing locally, and dealing with license keys or errors. There were no cloud saves and no syncing, just files dumped onto your hard disk.

In short, everything lived on your hard drive, or it didn’t exist at all.

Windows XP Redefines Usability

In 2001, when Microsoft released Windows XP, it hit a sweet spot:

Clean interface.

High stability.

Long-lasting support.

In short, Windows XP quickly became the OS of the decade. It powered homes, schools, offices, and even ATMs, a true workhorse OS that stuck around much longer than Microsoft expected.

The Web Gets Interactive

Between 2004 and 2006, the term “Web 2.0” entered the scene. A wave of richer, more dynamic websites started to emerge:

Gmail - made email fast and modern

Facebook - started in colleges, spreading rapidly

YouTube - kicked off video streaming for the masses

In short, Web 2.0 replaced the dull old static Web and took the throne.

Era 2: Mobility Takes Over (2007–2014)

This was the era when computers broke free from the desk. The rise of powerful laptops, smartphones, and tablets meant computing was no longer a place you went; it was something you carried with you.

Laptops Become the Default

By the late 2000s, laptops had become affordable and portable enough to replace desktops. For most people, they weren’t luxury items anymore; they were a necessity. Whether you were in school or just browsing at a café, laptops became the default choice.

The Smartphone Boom Changes Everything

Then came 2007, and suddenly everything changed. Apple dropped the iPhone, and in the next moment, the internet wasn’t just on your desk or lap, it was in your pocket.

A year later, in 2008, the first Android phone arrived, offering an alternative with its own advantages.

But the point is this:

You no longer needed to carry a big laptop around or go to a café to use a computer. Smartphones were here, and they were more than just cool gadgets. They were primary computing devices. You could do almost everything on them.

Tablets, Wi-Fi, and Apps: It All Came Together

In 2010, Apple launched the iPad, and suddenly computing had a new form. It was bigger than a phone, lighter than a laptop, and built entirely around touch. But really, this wasn’t just about the device. It was about how it brought all the pieces together in one picture.

Smartphones were already out.

Tablets were joining in.

And now, with Wi-Fi becoming normal everywhere, you just needed to hit connect and you were ready to go.

And remember how you had to wrestle with CDs to install software? That got solved as well. You could just open the App Store or Google Play, hit download, and your software would be there in seconds.

The world had completely changed; you didn’t need a bulky tower or a mouse. Just a screen, a signal, and a tap, and people loved it. Computing was now completely mobile and totally personal.

Era 3: After the Cloud: Smart Everything (2015–Present)

By the mid-2010s, everything had changed. The cloud wasn’t something people only knew from movies; it was reality. Every device had gotten smarter and better, and more and more of our data moved off hard drives.

The Cloud Takes Over

Everything started living in the cloud, and became accessible from anywhere, at any time. Google Drive, Dropbox, iCloud… You could just store your data on them and never worry about carrying your files around with you.

Software changed drastically as well. You didn’t install things; you simply opened a browser.

- Google Docs became a real alternative to Word.

- Figma moved design work that once needed desktop apps like Photoshop into the browser.

Everything from writing to design to meetings started happening on the web. No downloads needed.

The cloud didn’t just let people store their data.

It also provided another important feature: seamlessness.

Start writing on your phone, finish on your laptop.

Take a photo on your tablet, and access it from your desktop.

Everything became synced.

Everything became a part of one connected experience.

All because of the Cloud.

Devices Start Thinking

This is also the era when computers stopped just following instructions and started making decisions.

AI and machine learning went from quietly filtering spam to helping people learn new things and so much more.

Voice assistants like Siri, Alexa, and Google Assistant made it normal to talk to your devices. And last but not least, algorithms began running the world: they told you what to watch, what to buy, and even which route to drive.

Your devices weren’t just tools anymore, they were more than that…

The Computer Is Now Everywhere - and Nowhere

Tech is everywhere. What do I mean by that?

Think about it: your TV, speaker, watch, car, and even your fridge might be connected to the internet. This is the era of the Internet of Things (IoT) and ambient computing, where tech doesn’t sit in front of you; it’s all around you, working silently in the background.

You wake up, and your lights adjust.

You speak, and a smart assistant responds.

Technology has become invisible yet omnipresent.

And what about desktops?

They’re still here, but they’ve evolved.

Desktops of this era are leaner and more efficient than ever. But they’re mostly used by creators, gamers, engineers, or people with serious work to do. Sure, you can use one to check emails or scroll feeds. But you’ve got your phone for that.

In short, we have moved from using a computer to living inside a network of connected devices. And now? These devices are just everywhere.

Where We Started. Where We Are.

If you’ve made it this far, kudos to you! You just time-traveled from the abacus to a world where a whole computer can fit on your wrist.

We started with manually operated machines, moved to room-sized beasts that cracked wartime codes, saw desktops that kept you tied to a desk, then watched phones, tablets, and the cloud turn it all personal and portable.

Now? The computer exists everywhere around you. It has turned from a device into an ecosystem.

And it’s still evolving.

We’ve got humanoid robots, AI-driven systems, and global intelligence being baked into everything. If that sparks your curiosity, check out my post about humanoid robots.

Because one thing’s clear:

The journey of computing isn’t over.

It’s just heating up.

The future?

Smarter. Smaller. Everywhere.

Other than that, I do have some questions for you guys. Feel free to answer them or share any of your stories that are linked to computers.

How do you feel about a future where machines might think for us?

Do you remember the first time you touched a keyboard or saw the internet load?

What was your moment of fascination with computers?

Lastly, if you found this piece valuable, drop your thoughts below. I read and reply to every comment.

Stay safe, stay secure.

Until next time…

